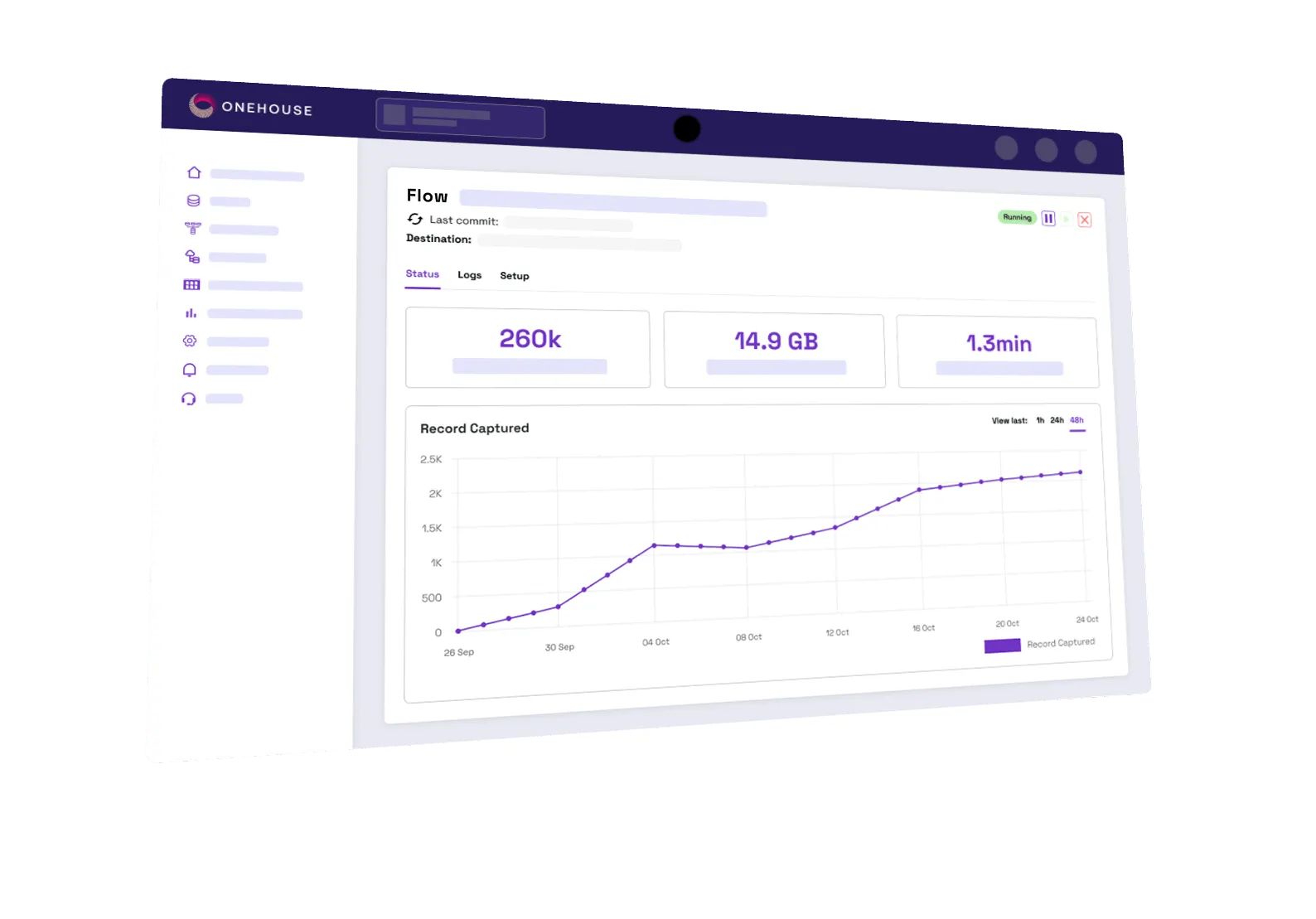

OneFlow Data Ingestion

The fastest ingestion of all your data into Apache Hudi™, Apache Iceberg™, and Delta Lake tables in your cloud storage. OneFlow ingestion delivers industry-leading performance at a fraction of the cost thanks to incremental processing.

Managed Data Ingestion

Ingest data directly from any flavor of Apache Kafka™.

Replicate operational databases such as PostgreSQL, MySQL, SQL Server, and MongoDB to the data lakehouse and materialize all updates, deletes, and merges for near real-time analytics.

Monitor your cloud storage buckets for any file changes, and rapidly ingest all of your Avro, CSV, JSON, Parquet, Proto, XML, and more into optimized lakehouse tables.
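The database replication described above comes down to replaying a source's change events against a keyed table. Here is a minimal sketch of that idea in plain Python, not Onehouse's implementation: the event shape (`op`, `key`, `row`) and the `apply_changelog` helper are hypothetical, chosen only for illustration.

```python
def apply_changelog(table, events):
    """Apply ordered change events to `table`, a dict keyed by primary key.

    The event layout used here is an assumption for this sketch.
    """
    for event in events:
        op = event["op"]
        key = event["key"]
        if op in ("insert", "update"):
            # Upsert: the newest row image replaces whatever was stored.
            table[key] = event["row"]
        elif op == "delete":
            # Deleting a missing key is a no-op, so replays stay idempotent.
            table.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return table


changelog = [
    {"op": "insert", "key": 1, "row": {"id": 1, "status": "new"}},
    {"op": "insert", "key": 2, "row": {"id": 2, "status": "new"}},
    {"op": "update", "key": 1, "row": {"id": 1, "status": "paid"}},
    {"op": "delete", "key": 2},
]

orders = apply_changelog({}, changelog)
```

After the replay, `orders` holds only the latest image of each surviving row, which is what "materializing" updates and deletes means in this context.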

Deliver Powerful Performance at a Fraction of the Cost

Fast ingestion to keep up with source systems in near real-time.

Automatically scale compute to handle bursts in data ingestion without having to provision and monitor clusters.

Run on low-cost compute optimized for ETL/ELT with simple usage-based pricing.

Incrementally Transform Your Data

Transform data with industry-leading incremental processing technology for ETL/ELT to deliver data in near real-time.

Efficiently process only data that has changed to reduce compute costs and processing time.

Write data pipelines and transformations with a no-code or low-code UI with schema previews.

Leverage pre-built transformations or bring your own to securely transform data in your own VPC.
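To make "process only data that has changed" concrete, here is a small sketch of checkpoint-based incremental processing. It is an illustration under stated assumptions rather than a description of how Onehouse works internally: the `Record` type, its `commit_time` field, and the `incremental_batch` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    key: str
    value: int
    commit_time: int  # hypothetical, monotonically increasing commit marker


def incremental_batch(records, checkpoint):
    """Return the records committed after `checkpoint` and the new checkpoint."""
    changed = [r for r in records if r.commit_time > checkpoint]
    # If nothing changed, keep the old checkpoint so the next run starts there.
    new_checkpoint = max((r.commit_time for r in changed), default=checkpoint)
    return changed, new_checkpoint


source = [
    Record("a", 1, commit_time=1),
    Record("b", 2, commit_time=2),
    Record("a", 3, commit_time=3),
]

# First run: everything is new relative to checkpoint 0.
first, checkpoint = incremental_batch(source, checkpoint=0)

# A new record arrives; the second run only sees that record.
source.append(Record("c", 4, commit_time=4))
second, checkpoint = incremental_batch(source, checkpoint=checkpoint)
```

The second run touches one record instead of four, which is where the savings in compute and processing time come from as tables grow.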

Achieve Quality that Counts

Set and enforce expectations of data quality. Quarantine bad records in-flight.

Never miss a byte. Onehouse continuously monitors your data sources for new data and seamlessly handles schema changes.

Adapt to schema changes as data is ingested, so upstream sources don’t disrupt the delivery of high-quality data.
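Enforcing expectations and quarantining bad records in-flight can be pictured as a split step in the pipeline. The sketch below is a generic illustration, not the Onehouse API: the `split_by_expectations` helper and the two example expectations are assumptions made for this example.

```python
def split_by_expectations(records, expectations):
    """Split records into (valid, quarantined) using named predicate checks."""
    valid, quarantined = [], []
    for record in records:
        failed = [name for name, check in expectations.items() if not check(record)]
        if failed:
            # Keep the record and the reasons so it can be inspected and replayed.
            quarantined.append({"record": record, "failed": failed})
        else:
            valid.append(record)
    return valid, quarantined


# Hypothetical expectations for an orders feed.
expectations = {
    "id_present": lambda r: r.get("id") is not None,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
}

incoming = [
    {"id": 1, "amount": 10.0},
    {"id": None, "amount": 5.0},
    {"id": 3, "amount": -2},
]

valid, quarantined = split_by_expectations(incoming, expectations)
```

Only the valid records continue to the target table; quarantined records carry the names of the checks they failed, so they can be fixed and reprocessed without blocking the rest of the stream.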

Find Out More About Accelerated Ingestion & ETL with Onehouse

Support all major data lakehouse formats

Eliminate data lakehouse format friction. Work seamlessly across Apache Hudi, Apache Iceberg, and Delta Lake.

Leverage interoperability between Apache Hudi, Apache Iceberg, and Delta Lake

Eradicate lock-in with the most robust set of data lakehouse format and data catalog compatibility.

Provide abstractions and tools for the translation of lakehouse table format metadata.

Accelerated Spark Runtime for Apache Hudi, Apache Iceberg, and Delta Lake

With Onehouse Compute Runtime as the foundation of your data lakehouse, accelerate queries 2-30x and cut infrastructure costs 20-80%.

How Customers Use OneFlow Today

Onehouse works with a variety of customers, from large enterprises to startups that are just starting their data journey. We have experience across verticals including technology, finance, healthcare, retail, and beyond. See what customers are doing with Onehouse today:

Full Change Data Capture

A Onehouse customer with large deployments of MySQL has many transactional datasets. With Onehouse, they extract changelogs and create low-latency CDC pipelines to enable analytics-ready Hudi tables on S3.

Real-time machine learning pipelines

An insurance company uses Onehouse to help generate real-time quotes for customers on its website. Onehouse helped the company access untapped datasets and reduced the time to generate an insurance quote from days or weeks to under an hour.

Replace long batch processing time

A large tech SaaS company used Onehouse’s technology to reduce its batch processing times from 3+ hours to under 15 minutes, all while saving ~40% on infrastructure costs. Having replaced its DIY Spark jobs with a managed service, the company can now operate its platform with a single engineer.

Ingest Clickstream data

A talent marketplace company uses Onehouse to ingest all clickstream events from their mobile apps. They run multi-stage incremental transformation pipelines through Onehouse and query the resulting Hudi tables with BigQuery, Presto, and other analytics tools.

Do you have a similar story?

Streamlined Data Ingestion with Onehouse

Continuously replicate data in near real time, manage high-volume event streams, and transfer files across various sources, while enjoying the flexibility and affordability of the Universal Data Lakehouse architecture.

How Does Onehouse Fit In?

You have questions, we have answers

A Lakehouse is an architectural pattern that combines the best capabilities of a data lake and a data warehouse. Data lakes built on cloud storage like S3 are the cheapest and most flexible ways to store and process your data, but they are challenging to build and operate. Data warehouses are turn-key solutions, offering capabilities traditionally not possible on a lake like transaction support, schema enforcement, and advanced performance optimizations around clustering, indexing, etc.

Now with the emergence of Lakehouse technologies like Apache Hudi, you can unlock the power of a warehouse directly on the lake for orders of magnitude cost savings.

While born from the roots of Apache Hudi and founded by its original creator, Onehouse is not an enterprise fork of Hudi. The Onehouse product and its services leverage OSS Hudi to offer a data lake platform similar to what companies like Uber have built, while adding optimizations such as our Onehouse Compute Runtime. We remain fully committed to contributing to and supporting the rapid growth and development of Hudi as the industry-leading lakehouse platform.

No. Onehouse offers services that are complementary to Databricks, Snowflake, or any other data warehouse or lake query engine. Our mission is to accelerate your adoption of a lakehouse architecture. We focus on the foundational data infrastructure that is left as a DIY struggle in today's data lake ecosystem. If you plan to use Databricks, Snowflake, EMR, BigQuery, Athena, or Starburst, we can help accelerate and simplify your adoption of these services. Onehouse interoperates with Delta Lake and Apache Iceberg through the Apache XTable (incubating) project to better support Databricks and Snowflake queries, respectively.

Onehouse delivers its management services on a data plane inside your cloud account. Unlike the approach of many vendors, this ensures that no data ever leaves the trust boundary of your private networks and that sensitive production databases are not exposed externally. You maintain ownership of all your data in your own S3, GCS, or other cloud storage buckets. Onehouse’s commitment to openness ensures your data is future-proof. As of this writing, Onehouse is SOC 2 Type I and Type II and PCI DSS compliant. Onehouse is also available on multiple clouds.

If you have data in RDBMS databases, event streams, or even data lost inside data swamps, Onehouse can help you ingest, transform, manage, and make all of your data available in a fully managed lakehouse. Since we don’t build a query engine, we don’t play favorites; we focus simply on making your underlying data performant and interoperable with any and all query engines.

If you are considering a data lake architecture to either offload costs from a cloud warehouse or unlock data science and machine learning, Onehouse can standardize how you build your data lake ingestion pipelines and leverage battle-tested, industry-leading technologies like Apache Hudi to achieve your goals at much lower cost and effort.

Onehouse meters how many compute-hours are used to deliver its services and charges an hourly compute cost based on usage. Connect with our account team to dive deeper into your use case, and we can provide total cost of ownership estimates for you. Our pricing is proven to cost significantly less than alternative DIY solutions.

If you have large Hudi installations and you want help operating them better, Onehouse can offer a limited one-time technical advisory/implementation service. We also offer the fully managed Lakehouse Table Optimizer to accelerate your Hudi pipelines and tables while reducing cloud infrastructure costs without rewriting any code. Onehouse engineers and developer advocates are active daily in the Apache Hudi community Slack and GitHub to answer questions on a best-effort basis.

Start Free Trial

Sign up for a free trial to receive $1,000 in free credit for 30 days